Misinformation and Disinformation: An international effort using behavioural science to tackle the spread of misinformation, Observatory of Public Sector Innovation, 19 October 2022

Executive summary

Social media platforms are powerful tools to enhance public awareness and access to information. Yet coinciding with the growth of these platforms is the spread of mis- and dis-information. Misinformation is false or inaccurate information not disseminated with the intention of deceiving the public. Disinformation, on the other hand, is false, inaccurate, or misleading information deliberately created, presented and disseminated to deceive the public.

Mis- and dis-information can have profound effects on the ability of democratically-elected governments to serve the public, by disrupting policy implementation and undermining trust in institutions in both advanced and developing countries.

Driven by a joint objective to better understand and reduce the spread of misinformation with insights and tools from behavioural science, the OECD, in partnership with behavioural science experts from Impact Canada (IIU) and from the French Direction interministérielle de la transformation publique (DITP), launched a first-of-its-kind international collaboration. This exchange of best practices between governments and academics led to a study conducted in Canada as a randomised controlled trial embedded within the longitudinal COVID-19 Snapshot Monitoring Study (COSMO Canada).

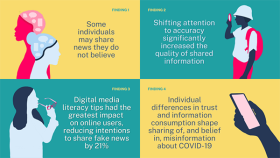

This study, conducted with 1,872 people across Canada, assessed the impact of two behaviourally-informed interventions – an attention accuracy prompt and a set of digital media literacy tips – on intentions to share true and false news headlines about COVID-19 on social media.

While both behaviourally-informed interventions were found to be effective, the digital media literacy tips reduced false headline sharing 3.5 times more than the accuracy prompt, and overall produced a 21% decrease in intentions to share fake news online compared to the control group (see graph).
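To make the two headline figures easier to relate to each other, the sketch below works through the arithmetic. It assumes that the 21% figure and the 3.5 ratio are both expressed as relative reductions against the control group, which the summary does not state explicitly. The control-arm sharing rate and the function names are hypothetical and chosen only for illustration; they are not taken from the study.

```python
# Figures quoted in the executive summary.
TIPS_RELATIVE_REDUCTION = 0.21  # digital media literacy tips vs. control
TIPS_TO_PROMPT_RATIO = 3.5      # tips effect divided by accuracy-prompt effect

# ASSUMPTION: hypothetical control-arm rate of intending to share a false
# headline. This number is NOT reported in the summary.
HYPOTHETICAL_CONTROL_RATE = 0.40


def relative_reduction(control_rate: float, treated_rate: float) -> float:
    """Fractional drop in sharing intentions relative to the control arm."""
    if control_rate <= 0:
        raise ValueError("control_rate must be positive")
    return (control_rate - treated_rate) / control_rate


def implied_prompt_reduction(tips_reduction: float, ratio: float) -> float:
    """Accuracy-prompt reduction implied by the tips effect and the ratio.

    Valid only under the assumption that both figures are measured on the
    same relative-to-control scale.
    """
    return tips_reduction / ratio


prompt_reduction = implied_prompt_reduction(
    TIPS_RELATIVE_REDUCTION, TIPS_TO_PROMPT_RATIO
)

# Sharing rates each arm would show under the hypothetical control rate.
tips_rate = HYPOTHETICAL_CONTROL_RATE * (1 - TIPS_RELATIVE_REDUCTION)
prompt_rate = HYPOTHETICAL_CONTROL_RATE * (1 - prompt_reduction)

print(f"Implied accuracy-prompt reduction: {prompt_reduction:.1%}")
print(f"Hypothetical tips-arm sharing rate: {tips_rate:.3f}")
print(f"Hypothetical prompt-arm sharing rate: {prompt_rate:.3f}")
```

Under that assumption, the accuracy prompt would correspond to a reduction of roughly 6% relative to control. This is an inference from the two quoted figures, not a result reported in the summary.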

Overall, results from this experiment suggest that user-end, scalable, behavioural interventions can significantly reduce sharing of false news headlines in online settings. The results offer compelling avenues for empowering individuals to make active choices about information quality while prioritising and preserving citizen autonomy. Behavioural tools can provide pre-emptive and complementary approaches that can be deployed alongside system-level approaches that regulate, set standards, or otherwise address false information online. The results of this study are an important step in bolstering our understanding of the impact of mis- and dis-information and testing feasible, effective, and collaborative solutions.

Summary of findings

Key insights

- A comprehensive policy response to mis- and disinformation should include an expanded understanding of human behaviour.

- By empowering users, behavioural science offers effective and scalable policy tools that can complement system-level policy to better respond to misinformation.

- International experimentation across governments is vital for tackling global policy challenges and generating sustainable responses to the spread of mis- and disinformation.

The paper concludes by outlining additional opportunities for research and urging governments to increase efforts to conduct policy experimentation in collaboration with other countries before enacting policy.

Are you curious to learn about targeted applications of this work? Reach out to Chiara Varazzani, OECD Lead Behavioural Scientist, and find out how we can work together.